Key takeaways

- Turn messy Indian bank narrations into structured, ledger-ready data by systematically decoding IMPS, NEFT, RTGS, and UPI identifiers, mapping UTRs, and standardising fields.

- Prioritise UTR reference mapping for reconciliation accuracy; see UTR reference mapping for a practical playbook.

- Adopt a hybrid approach: regex for strict identifiers (UTR, RRN, IFSC, GSTIN, PAN), machine learning for fuzzy entities and categorisation, and rule-based validators for checksums and consistency.

- Enrich narrations with GSTIN and PAN to unlock compliance, TDS, and GSTR workflows, while masking all but the last 4 characters of these IDs in UI and logs.

- Design a canonical schema for consistent outputs, track confidence scores, and maintain versioned rule sets to ensure auditability and drift control.

- Plug standardised data into Tally or Zoho for auto classification, invoice linking, AR/AP ageing, and dashboards, cutting manual effort by 70%+.

Why narrations matter in India (Context and pipeline placement)

Bank narrations in India are more than descriptive text: they are the backbone of reconciliation, GST audit trails, vendor identity, and AR/AP tracking. When parsed well, they become searchable, ledger-ready fields that auto-post to your accounting system, powering cash flow, revenue vs expense, and ageing dashboards.

Pipeline placement: ingestion → parsing → enrichment → classification → reconciliation → sync to Tally/Zoho → dashboards.

Without proper parsing, every NEFT needs human review, every UPI needs vendor matching, and IMPS entries land in suspense. With consistent narration standardisation, TDS becomes accurate, GST filing becomes manageable, and month-end panic gives way to predictable close.

Primer: Anatomy of Indian bank narrations

Indian narrations hide valuable metadata behind inconsistent templates and OCR noise. Key tokens include DR/CR markers, UTR for NEFT/RTGS, RRN for IMPS, UPI transaction IDs, IFSC codes, masked account hints (XXXX1234), and VPAs like name@ybl or abc@okhdfcbank. Fee markers (CHG, COMM), refund indicators (REV, RVS), and invoice hints (INV, REF#, BILL NO) often appear.

Each bank uses different templates, and OCR adds quirks such as O↔0 or I↔1. Effective standardisation accounts for these variations with normalisation, transliteration, and token dictionaries.

Example NEFT narration: “NEFT DR UTIB0000123 VENDOR PAYMENTS LTD INV2024001 UTR HDFC240012345678 CHG 5.90”

Extracted fields: rail_type=NEFT, direction=DR, IFSC=UTIB0000123, counterparty=VENDOR PAYMENTS LTD, invoice_ref=INV2024001, UTR=HDFC240012345678, fee=5.90
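A minimal Python sketch of the strict-token pass on a NEFT line like the one above. `PATTERNS` and `extract_fields` are hypothetical names. The IFSC shape (four letters, a literal 0, six alphanumerics) follows the standard IFSC format, while the UTR pattern is deliberately loose (16 to 22 characters) so that per-rail length checks can run later. The counterparty name is left to the ML step, in line with the hybrid approach this article recommends.

```python
import re

# Hypothetical patterns for strict tokens; real bank templates vary.
PATTERNS = {
    "rail_type": re.compile(r"\b(NEFT|RTGS|IMPS|UPI)\b"),
    "direction": re.compile(r"\b(DR|CR)\b"),
    # IFSC: 4 letters, a literal 0, then 6 alphanumerics
    "ifsc": re.compile(r"\b([A-Z]{4}0[A-Z0-9]{6})\b"),
    # UTR after the keyword "UTR": 16 to 22 chars; exact length is checked per rail later
    "utr": re.compile(r"\bUTR\s+([A-Z]{4}[A-Z0-9]{12,18})\b"),
    # Invoice hints such as INV2024001 or INV-001 (a digit must follow INV)
    "invoice_ref": re.compile(r"\b(INV[-/]?\d[\w/-]*)"),
    # Fee amount after a CHG or COMM marker
    "fee": re.compile(r"\b(?:CHG|COMM)\s+(\d+(?:\.\d{1,2})?)\b"),
}


def extract_fields(narration: str) -> dict:
    """Return the first match for each strict token; names are left to ML/NER."""
    text = " ".join(narration.upper().split())  # upper-case, collapse whitespace
    fields = {}
    for name, pattern in PATTERNS.items():
        match = pattern.search(text)
        if match:
            fields[name] = match.group(1)
    return fields


print(extract_fields(
    "NEFT DR UTIB0000123 VENDOR PAYMENTS LTD INV2024001 UTR HDFC240012345678 CHG 5.90"
))
# {'rail_type': 'NEFT', 'direction': 'DR', 'ifsc': 'UTIB0000123', 'utr': 'HDFC240012345678', 'invoice_ref': 'INV2024001', 'fee': '5.90'}
```

Because each pattern keys on a marker (the UTR keyword, CHG, the IFSC shape), a template that reorders tokens still parses; a template that drops the UTR keyword would need an additional pattern.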

Rail by rail decoding: IMPS, NEFT, RTGS, UPI

IMPS

IMPS uses a 12-digit RRN with P2A (person-to-account) or P2P (person-to-person) markers, and often includes timestamps in DDMMYYHHMM format. Wallet intermediaries and agent banking can obscure the true counterparty.

Example: “IMPS P2A RRN 230145678901 FROM JOHN DOE MOBILE 9876XX1234 TO AC XXXX5678 IFSC SBIN0001234”

Extracted: rail_type=IMPS, RRN=230145678901, counterparty=JOHN DOE, recipient_account=XXXX5678, recipient_IFSC=SBIN0001234
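The same keyword-anchored idea applies to IMPS. This sketch assumes the keywords FROM, MOBILE, TO, AC and IFSC appear as in the example above; the patterns and `parse_imps` are illustrative, and the DDMMYYHHMM timestamp and wallet intermediaries are not handled.

```python
import re

# Hypothetical keyword-anchored patterns for IMPS lines like the example above.
RRN_RE = re.compile(r"\bRRN\s*[:#]?\s*(\d{12})\b")
MODE_RE = re.compile(r"\b(P2A|P2P)\b")
# Name runs from FROM up to the next known keyword (assumed list) or the end.
NAME_RE = re.compile(r"\bFROM\s+(.+?)(?=\s+(?:MOBILE|TO|IFSC|RRN)\b|$)")
ACCOUNT_RE = re.compile(r"\bAC\s+(X+\d{4})\b")  # masked account hint
IFSC_RE = re.compile(r"\bIFSC\s+([A-Z]{4}0[A-Z0-9]{6})\b")

FIELDS = [
    ("rrn", RRN_RE),
    ("mode", MODE_RE),
    ("counterparty", NAME_RE),
    ("recipient_account", ACCOUNT_RE),
    ("recipient_ifsc", IFSC_RE),
]


def parse_imps(narration: str):
    """Return IMPS fields, or None if the line is not an IMPS narration."""
    text = " ".join(narration.upper().split())
    if not text.startswith("IMPS"):
        return None
    out = {"rail_type": "IMPS"}
    for key, pattern in FIELDS:
        match = pattern.search(text)
        if match:
            out[key] = match.group(1)
    return out
```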

NEFT

NEFT narrations include UTR references, typically 16 or 22 alphanumeric characters with a bank prefix. Disambiguate same-day, same-amount collisions using UTR + IFSC + beneficiary name.

Example: “NEFT CR UTR SBIN240123456789012345 FROM CUSTOMER ABC PVT LTD IFSC HDFC0001234 INVOICE 2024/001”

Extracted: rail_type=NEFT, direction=CR, UTR=SBIN240123456789012345, counterparty=CUSTOMER ABC PVT LTD, sender_IFSC=HDFC0001234, invoice_ref=2024/001

RTGS

RTGS carries 22-character UTRs for high-value transfers, often with related fee lines nearby that should be linked.

Example: “RTGS DR UTR HDFC240123456789012345 TO SUPPLIER XYZ LIMITED PURPOSE VENDOR PAYMENT”

Extracted: rail_type=RTGS, direction=DR, UTR=HDFC240123456789012345, counterparty=SUPPLIER XYZ LIMITED, purpose=VENDOR PAYMENT
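Given the lengths stated above (NEFT: 16 or 22 characters, RTGS: 22), a per-rail check can flag malformed UTRs before they reach matching. This is a hypothetical helper; the four-letter bank prefix rule is an assumption drawn from the examples, and it returns a list of problems so the caller can flag a line rather than silently drop it.

```python
import re
from typing import List

# Lengths follow the text: NEFT 16 or 22 characters, RTGS 22.
UTR_LENGTHS = {"NEFT": {16, 22}, "RTGS": {22}}
# Assumed shape: a 4-letter bank prefix followed by alphanumerics.
UTR_SHAPE = re.compile(r"[A-Z]{4}[A-Z0-9]+")


def check_utr(rail: str, utr: str) -> List[str]:
    """Return a list of problems; an empty list means the UTR looks plausible."""
    utr = utr.strip().upper()
    problems = []
    if not UTR_SHAPE.fullmatch(utr):
        problems.append("expected a 4-letter bank prefix followed by alphanumerics")
    allowed = UTR_LENGTHS.get(rail.upper())
    if allowed is None:
        problems.append(f"no UTR length rule for rail {rail!r}")
    elif len(utr) not in allowed:
        problems.append(f"length {len(utr)} not in {sorted(allowed)} for {rail}")
    return problems
```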

UPI

UPI narrations show VPAs like abc@okhdfcbank or xyz@ybl, with 12-digit transaction IDs. Distinguish push payments, collect requests, mandates, merchant QR payments, and refunds, which may link back to original references.

Example: “UPI/supplier@okhdfcbank/SUPPLIER NAME/INV001/240112345678/SUCCESS”

Extracted: rail_type=UPI, counterparty_VPA=supplier@okhdfcbank, counterparty_name=SUPPLIER NAME, reference=INV001, txn_id=240112345678, status=SUCCESS
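Because UPI segments do not always arrive in the same order (compare the two UPI samples in this article), classifying each slash-separated segment by its shape is more robust than relying on fixed positions. In this sketch `parse_upi`, the VPA pattern and the status vocabulary are assumptions, and treating the first free-text segment as the counterparty name is a heuristic.

```python
import re

VPA_RE = re.compile(r"^[A-Za-z0-9._-]+@[A-Za-z][A-Za-z0-9]*$")
TXN_ID_RE = re.compile(r"^\d{12}$")
STATUSES = {"SUCCESS", "FAILED", "PENDING"}  # assumed status vocabulary


def parse_upi(narration: str):
    """Classify slash-separated UPI segments by shape instead of position."""
    parts = [p.strip() for p in narration.split("/")]
    if parts[0].upper() != "UPI":
        return None
    out = {"rail_type": "UPI"}
    free_text = []
    for part in parts[1:]:
        if not part:
            continue
        if "counterparty_vpa" not in out and VPA_RE.match(part):
            out["counterparty_vpa"] = part.lower()
        elif "txn_id" not in out and TXN_ID_RE.match(part):
            out["txn_id"] = part
        elif part.upper() in STATUSES:
            out["status"] = part.upper()
        else:
            free_text.append(part)
    # Heuristic: the first free-text segment is usually the counterparty name;
    # the rest (invoice hints, GSTINs, notes) go to enrichment in order.
    if free_text:
        out["counterparty_name"] = free_text[0]
        out["other_segments"] = free_text[1:]
    return out
```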

UTR reference mapping: Backbone of reconciliation

UTR reference mapping is the foundation of automated reconciliation, acting as the primary key to link bank entries to invoices or bills. Extract UTRs with strict regex, validate with amount, date, and rail type, then match via counterparty and expected due windows.

Use a priority stack: exact UTR match, UTR + amount tolerance, UTR + fuzzy counterparty, and fallback to IFSC/VPA + amount + date window. Maintain a dedup store keyed by (UTR, amount, date), and link fees, reversals, or refunds by proximity and echoed references. For a broader look at industry-grade parsing, see how Plaid parses transaction data.
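One way to express the priority stack and the dedup store in Python, as a sketch. The tiers interpret "exact UTR match" as UTR plus identical amount; the `expected_utr` field on an open item (for example, taken from a payment advice) and the tolerance, window and name-threshold defaults are all hypothetical. A tier with more than one hit returns an ambiguity marker instead of guessing. Floats keep the sketch short; `Decimal` is the safer choice for money.

```python
from dataclasses import dataclass
from datetime import date
from difflib import SequenceMatcher
from typing import List, Optional, Tuple


@dataclass
class BankLine:
    utr: Optional[str]
    amount: float
    booking_date: date
    counterparty: str
    instrument: Optional[str] = None  # IFSC or VPA


@dataclass
class OpenItem:
    invoice_id: str
    expected_utr: Optional[str]  # hypothetical: UTR from a payment advice, if known
    amount: float
    due_date: date
    party: str
    instrument: Optional[str] = None


def _similar(a: str, b: str) -> float:
    return SequenceMatcher(None, a.upper(), b.upper()).ratio()


def match_line(line: BankLine, items: List[OpenItem], tol: float = 1.0,
               window_days: int = 7, name_threshold: float = 0.85
               ) -> Tuple[Optional[str], Optional[str]]:
    """Walk the priority stack; stop at the first tier with any hit."""
    def utr_eq(it: OpenItem) -> bool:
        return bool(line.utr) and it.expected_utr == line.utr

    tiers = [
        ("exact_utr", lambda it: utr_eq(it) and abs(it.amount - line.amount) < 0.005),
        ("utr_amount_tol", lambda it: utr_eq(it) and abs(it.amount - line.amount) <= tol),
        ("utr_fuzzy_name", lambda it: utr_eq(it)
            and _similar(it.party, line.counterparty) >= name_threshold),
        ("instrument_amount_date", lambda it: bool(line.instrument)
            and it.instrument == line.instrument
            and abs(it.amount - line.amount) <= tol
            and abs((it.due_date - line.booking_date).days) <= window_days),
    ]
    for tier, predicate in tiers:
        hits = [it for it in items if predicate(it)]
        if len(hits) == 1:
            return hits[0].invoice_id, tier
        if hits:
            return None, "ambiguous:" + tier  # send to review instead of guessing
    return None, None


class DedupStore:
    """Remembers (UTR, amount, date) keys so re-imported lines are not matched twice."""

    def __init__(self) -> None:
        self._seen = set()

    def is_duplicate(self, line: BankLine) -> bool:
        key = (line.utr, round(line.amount, 2), line.booking_date)
        if key in self._seen:
            return True
        self._seen.add(key)
        return False
```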

Enrich with GSTIN and PAN for compliance grade records

GSTIN and PAN enrichment unlocks compliance, ITC trails, TDS accuracy, and audit readiness. PAN is 10 characters in the format AAAAA9999A, while GSTIN is 15 characters: a 2-digit state code, the holder's 10-character PAN, a 1-character entity number for registrations under that PAN in the state, the letter Z by default, and a check character.

- Validate GSTIN checksums, verify PAN structure, and run OCR confusion passes (O↔0, I↔1, B↔8) with confidence scoring.

- Link to vendor master via fuzzy name matching, triangulate using IFSC/VPA + GSTIN/PAN, and flag mismatches between names and statutory IDs.

Example enrichment: From “UPI/vendor@ybl/ACME ENTERPRISES/32ABCDE1234F1Z9/INV001/240112345678/SUCCESS”, extract GSTIN 32ABCDE1234F1Z9, PAN ABCDE1234F (characters 3 to 12 of the GSTIN), and resolve the vendor via VPA and fuzzy matching. Mask and role-lock sensitive IDs in UI and logs.
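The GSTIN check character is commonly described as a Luhn mod 36 scheme: map each of the first 14 characters to base 36, weight them alternately 1 and 2, fold each product back into base 36, and take the complement of the sum. The sketch below follows that description; the function names are hypothetical, and the rule should be confirmed against GSTN documentation before production use. For the body 32ABCDE1234F1Z, the computed check character is 9.

```python
import re

CHARSET = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"  # base-36 code points
PAN_RE = re.compile(r"[A-Z]{5}[0-9]{4}[A-Z]")
# state code, PAN, entity number, usually-Z character (kept loose), check character
GSTIN_RE = re.compile(r"[0-9]{2}[A-Z]{5}[0-9]{4}[A-Z][0-9A-Z]{3}")


def gstin_check_char(first14: str) -> str:
    """Compute the 15th character from the first 14 (alternating weights 1, 2)."""
    total = 0
    for i, ch in enumerate(first14):
        product = CHARSET.index(ch) * (1 if i % 2 == 0 else 2)
        total += product // 36 + product % 36  # fold the product back into base 36
    return CHARSET[(36 - total % 36) % 36]


def validate_gstin(gstin: str) -> bool:
    gstin = gstin.strip().upper()
    return bool(GSTIN_RE.fullmatch(gstin)) and gstin_check_char(gstin[:14]) == gstin[14]


def pan_from_gstin(gstin: str) -> str:
    """Characters 3 to 12 of a GSTIN are the holder's PAN."""
    return gstin.strip().upper()[2:12]


print(gstin_check_char("32ABCDE1234F1Z"))  # 9
print(validate_gstin("32ABCDE1234F1Z9"))   # True
print(pan_from_gstin("32ABCDE1234F1Z9"))   # ABCDE1234F
```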

Narration standardisation: From chaos to a canonical schema

Adopt a canonical schema with fields like rail_type, direction, booking_date, value_date, counterparty_name, counterparty_type, instrument (VPA/IFSC/account_hint), UTR/RRN/txn_id, GSTIN, PAN, invoice_ref, purpose_category, amount_currency, fees_flag, refund_flag, and notes_raw.
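A minimal sketch of that schema as a Python dataclass. It assumes `amount_currency` is split into `amount` and `currency`, and that the UTR, RRN and UPI txn_id share one `reference_id` column tagged by `reference_kind`; the class name and the per-field `confidence` dict are illustrative choices, not a fixed standard.

```python
from dataclasses import asdict, dataclass, field
from datetime import date
from decimal import Decimal
from typing import Dict, Optional


@dataclass
class CanonicalNarration:
    rail_type: str                      # NEFT / RTGS / IMPS / UPI
    direction: str                      # DR / CR
    booking_date: date
    value_date: Optional[date]
    counterparty_name: Optional[str]
    counterparty_type: Optional[str]    # e.g. vendor, customer, employee
    instrument: Optional[str]           # VPA, IFSC or masked account hint
    reference_id: Optional[str]         # the UTR, RRN or UPI txn_id value
    reference_kind: Optional[str]       # "UTR", "RRN" or "txn_id"
    gstin: Optional[str]
    pan: Optional[str]
    invoice_ref: Optional[str]
    purpose_category: Optional[str]
    amount: Decimal
    currency: str = "INR"
    fees_flag: bool = False
    refund_flag: bool = False
    notes_raw: str = ""                 # original narration, kept for audit
    confidence: Dict[str, float] = field(default_factory=dict)  # per-field scores


record = CanonicalNarration(
    rail_type="UPI", direction="DR", booking_date=date(2024, 6, 1), value_date=None,
    counterparty_name="ACME ENTERPRISES", counterparty_type="vendor",
    instrument="9876543210@ybl", reference_id="240112345678", reference_kind="txn_id",
    gstin=None, pan=None, invoice_ref="INV-001", purpose_category="vendor_payment",
    amount=Decimal("1180.00"), confidence={"counterparty_name": 0.92},
)
print(asdict(record)["currency"])  # INR
```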

- Normalise casing and whitespace, remove non-informational symbols, preserve originals for audit, and transliterate Indian scripts while storing both forms.

- Maintain bank token dictionaries (CHG=fee, REV=refund, TRF=transfer), version your rules, and store field-level confidence scores.
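A sketch of the normalisation and dictionary steps. It assumes a hypothetical `RULESET_VERSION` tag stored with every output and an assumed list of noise symbols (commas are kept so amounts like 50,000 survive). Transliteration of Indian scripts is out of scope for this sketch.

```python
import re
import unicodedata

RULESET_VERSION = "2024.06-1"  # hypothetical tag; bump on every dictionary/rule change

# Bank shorthand from the text above; extend per bank.
TOKEN_DICTIONARY = {
    "CHG": "fee", "COMM": "fee",
    "REV": "refund", "RVS": "refund",
    "TRF": "transfer",
}
# Assumed list of symbols that carry no meaning in narrations.
NOISE = re.compile(r"[*#:;|()\[\]]")


def normalise(raw: str) -> str:
    text = unicodedata.normalize("NFKC", raw).upper()
    text = NOISE.sub(" ", text)
    return " ".join(text.split())  # collapse runs of whitespace


def standardise(raw: str) -> dict:
    clean = normalise(raw)
    tags = sorted({TOKEN_DICTIONARY[t] for t in clean.split() if t in TOKEN_DICTIONARY})
    return {
        "notes_raw": raw,  # original kept verbatim for audit
        "clean": clean,
        "tags": tags,
        "ruleset_version": RULESET_VERSION,
    }
```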

Before: “UPI/9876543210@ybl/ACME ENTERPRISES/32ABCDE1234F1Z9/inv-001/Success/240112345678”

After: rail_type=UPI, direction=DR, counterparty_name=ACME ENTERPRISES, counterparty_type=vendor, instrument=9876543210@ybl, gstin=32ABCDE1234F1Z9, pan=ABCDE1234F, invoice_ref=INV-001, txn_id=240112345678, purpose_category=vendor_payment, confidence=0.92

Regex versus machine learning: Choosing the right tool (and why hybrid wins)

Regex is precise for strict identifiers like UTR, RRN, IFSC, GSTIN, and PAN, but it is brittle with OCR noise, multilingual tokens, and drifting formats. Machine learning excels at fuzzy entity extraction and categorisation, but needs labelled data and drift monitoring.

Recommended strategy: regex for strict tokens, ML (CRF or transformers) for counterparty names and categories, and rule-based validators for checksums and consistency. For context and trade-offs, compare regex vs AI-based detection, regex vs libraries vs OCR, data parsing approaches, how Plaid parses transaction data, and a recent peer-reviewed evaluation.

- Track metrics: per-field precision/recall, end-to-end auto classification rate, reconciliation success, and drift alerts when precision dips.
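Per-field precision and recall can be computed from a labelled sample with a few counters. In this sketch a wrong value counts as both a false positive and a false negative, a common convention for extraction tasks; the function names and the 0.05 drop threshold for drift alerts are assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def per_field_scores(predictions: List[dict], gold: List[dict]) -> Dict[str, Tuple[float, float]]:
    """Per-field (precision, recall) over rows aligned by index."""
    tp, fp, fn = defaultdict(int), defaultdict(int), defaultdict(int)
    for pred, truth in zip(predictions, gold):
        for name in set(pred) | set(truth):
            p, t = pred.get(name), truth.get(name)
            if p is not None and p == t:
                tp[name] += 1
                continue
            if p is not None:
                fp[name] += 1  # predicted a value that is wrong or not expected
            if t is not None:
                fn[name] += 1  # missed or mis-extracted a labelled value
    scores = {}
    for name in set(tp) | set(fp) | set(fn):
        predicted, actual = tp[name] + fp[name], tp[name] + fn[name]
        scores[name] = (tp[name] / predicted if predicted else 0.0,
                        tp[name] / actual if actual else 0.0)
    return scores


def drift_alerts(scores: Dict[str, Tuple[float, float]], baseline: Dict[str, float],
                 max_drop: float = 0.05) -> List[str]:
    """Fields whose precision fell more than max_drop below a stored baseline."""
    return sorted(name for name, (precision, _) in scores.items()
                  if name in baseline and baseline[name] - precision > max_drop)
```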

Implementation blueprint you can adopt

Ingestion: Accept PDF, CSV, Excel, and images with bank-aware OCR profiles (see bank statement OCR software for India). Handle Hindi and regional scripts, and deduplicate files by content checksum.
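Content-checksum deduplication can be as simple as hashing the uploaded bytes with SHA-256 from Python's standard `hashlib`. The `StatementInbox` class is hypothetical. It only catches byte-identical files, so a statement re-exported from netbanking still needs row-level (UTR, amount, date) deduplication.

```python
import hashlib
from typing import Dict, Tuple


class StatementInbox:
    """Skips byte-identical uploads using a SHA-256 content checksum."""

    def __init__(self) -> None:
        self._seen: Dict[str, str] = {}  # digest -> first filename seen

    def ingest(self, filename: str, data: bytes) -> Tuple[bool, str]:
        digest = hashlib.sha256(data).hexdigest()
        if digest in self._seen:
            return False, self._seen[digest]  # duplicate of an earlier file
        self._seen[digest] = filename
        return True, filename
```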

Parsing pipeline: pre-clean → tokenise → regex pass (UTR, RRN, IFSC, GSTIN, PAN) → ML NER for names and categories → conflict resolver → standardiser → validator. Prefer higher-confidence outputs and tie-break with checksum and date/amount consistency checks.
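The conflict resolver step can be sketched as follows: candidates from the regex and ML passes compete per field, an optional validator (for example, a GSTIN checksum function) removes invalid values, and the highest confidence wins, with ties going to regex. The `Candidate` type, `resolve` and the 0.6 floor are hypothetical; returning None is meant to route the field to a review queue.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Candidate:
    value: str
    confidence: float
    source: str  # "regex" or "ml"


def resolve(candidates: List[Candidate],
            validator: Optional[Callable[[str], bool]] = None,
            min_confidence: float = 0.6) -> Optional[Candidate]:
    """Pick one value per field, or None to send the field to review."""
    pool = [c for c in candidates if c.confidence >= min_confidence]
    if validator is not None:
        # For strict identifiers, a value that fails its checksum never wins.
        pool = [c for c in pool if validator(c.value)]
    if not pool:
        return None
    # Highest confidence first; on a tie prefer the regex extraction.
    return max(pool, key=lambda c: (c.confidence, c.source == "regex"))
```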

Entity stores: bank token dictionaries, VPA directory, IFSC registry, and vendor master with GSTIN/PAN and aliases.

Reconciliation hooks: Map UTRs to invoices with configurable tolerances, and support partial payments, credit notes, and refunds via reference tracking. For design inspiration, review how Plaid parses transaction data.

Observability and security: log field-level confidence, create review queues, capture feedback for retraining, and align with ISO 27001/SOC 2. Mask all but the last 4 characters of PAN/GSTIN and enforce role-based access.
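Masking all but the last four characters takes a single expression, and a regex pass can apply it to free text before it reaches logs. This sketch assumes upper-case input and uses the PAN and GSTIN shapes described earlier; `mask_id` and `redact` are illustrative names.

```python
import re

# GSTIN (15 chars) is tried first so its embedded PAN is masked as part of it.
PAN_OR_GSTIN = re.compile(r"\b(?:\d{2}[A-Z]{5}\d{4}[A-Z][0-9A-Z]{3}|[A-Z]{5}\d{4}[A-Z])\b")


def mask_id(value: str, visible: int = 4, mask_char: str = "X") -> str:
    """Mask every character except the last `visible` ones; short values are fully masked."""
    value = value.strip()
    if len(value) <= visible:
        return mask_char * len(value)
    return mask_char * (len(value) - visible) + value[-visible:]


def redact(text: str) -> str:
    """Mask PAN/GSTIN tokens inside free text such as log lines."""
    return PAN_OR_GSTIN.sub(lambda m: mask_id(m.group(0)), text)
```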

Edge cases and how to handle them

- Split settlements and aggregator payouts: A split settlement can spread one invoice across several UTRs, while an aggregator's batch payout can cover many invoices under a single UTR. Link by merchant VPA, settlement dates, and amount patterns, and aggregate to determine closure.

- Reversals and chargebacks: UPI refunds often generate new IDs. Map via refund keywords, amount proximity, and date windows.

- Partial names and masked digits: Use VPA/IFSC + amount + date windows with fuzzy matching and thresholded confidence.

- Same-amount collisions: Disambiguate same-day same-amount transactions with UTR, IFSC, VPA, and timestamps.

- OCR quirks and multilingual text: Run substitution passes (O↔0, I↔1, l↔1, B↔8, S↔5), detect scripts, transliterate, and fall back to ML when regex fails.

- Bank shorthand and legacy codes: Continuously extend dictionaries for CBS codes such as TRF, CHQ, SAL, and EMI, and version each change for drift tracking.
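A substitution pass works best when it is position-aware. A PAN has letters in positions 1 to 5 and 10 and digits in positions 6 to 9, so an 8 in a letter slot is likely a B, and an O in a digit slot is likely a 0. The sketch below applies the confusion pairs listed above only where the slot type demands it (lower-case l is covered by upper-casing to L first) and reports how many substitutions it made, which can feed a confidence penalty. `repair_pan` and the lookup tables are hypothetical.

```python
import re

PAN_RE = re.compile(r"[A-Z]{5}[0-9]{4}[A-Z]")
PAN_SLOTS = "LLLLLDDDDL"  # L = letter position, D = digit position (AAAAA9999A)
# Confusion pairs from the text; applied only where the slot type demands it.
TO_DIGIT = {"O": "0", "I": "1", "L": "1", "B": "8", "S": "5"}
TO_LETTER = {"0": "O", "1": "I", "8": "B", "5": "S"}


def repair_pan(token: str):
    """Return (pan_or_None, substitutions_made)."""
    token = token.strip().upper()
    if len(token) != len(PAN_SLOTS):
        return None, 0
    fixed, changes = [], 0
    for ch, slot in zip(token, PAN_SLOTS):
        table = TO_DIGIT if slot == "D" else TO_LETTER
        if ch in table:
            fixed.append(table[ch])
            changes += 1
        else:
            fixed.append(ch)
    candidate = "".join(fixed)
    return (candidate if PAN_RE.fullmatch(candidate) else None), changes
```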

How this plays with the Indian accounting stack

Standardised narrations drive auto classification, invoice linking, and dashboards. Clean fields enable accurate predictions of ledgers and GST codes; see ledger mapping automation for Tally and Zoho for examples.

Push classified entries to Tally or Zoho with proper voucher types, fetch pending invoices to boost matching, and power dashboards for revenue, expenses, burn, and cash flow. AR/AP ageing, DPO/DRO metrics, and GST readiness all benefit from consistent parsing.

Practical walkthrough (mini case)

Input (sanitised):

- NEFT CR UTR HDFC240012345678 FROM ABC ENTERPRISES INV2024001 AMT 50000 CHG 15.75

- UPI/supplier@ybl/XYZ TRADERS/27ABCDE1234F1Z0/BILL-445/240112345678/SUCCESS

- IMPS P2A RRN 230145678901 FROM CUSTOMER DEF LTD MOBILE 98765XXXXX SALARY

- RTGS DR UTR SBIN240123456789012345 TO PQR SUPPLIERS PURPOSE RAWMATERIAL

- UPI/9876543210@paytm/OFFICE RENT/JUNE2024/240112345679/SUCCESS

Processing flow: detect rails and extract identifiers, map UTRs to invoices, enrich GSTIN and PAN, standardise into the canonical schema, validate checksums and date/amount consistency, and sync to Tally.

Outcome: 4 of 5 transactions auto-linked to documents, the salary entry auto-categorised, 100% auto-post, 80% reconciliation rate, and confidence scores above 90% for 4 entries.

Tools and software for smart narration parsing

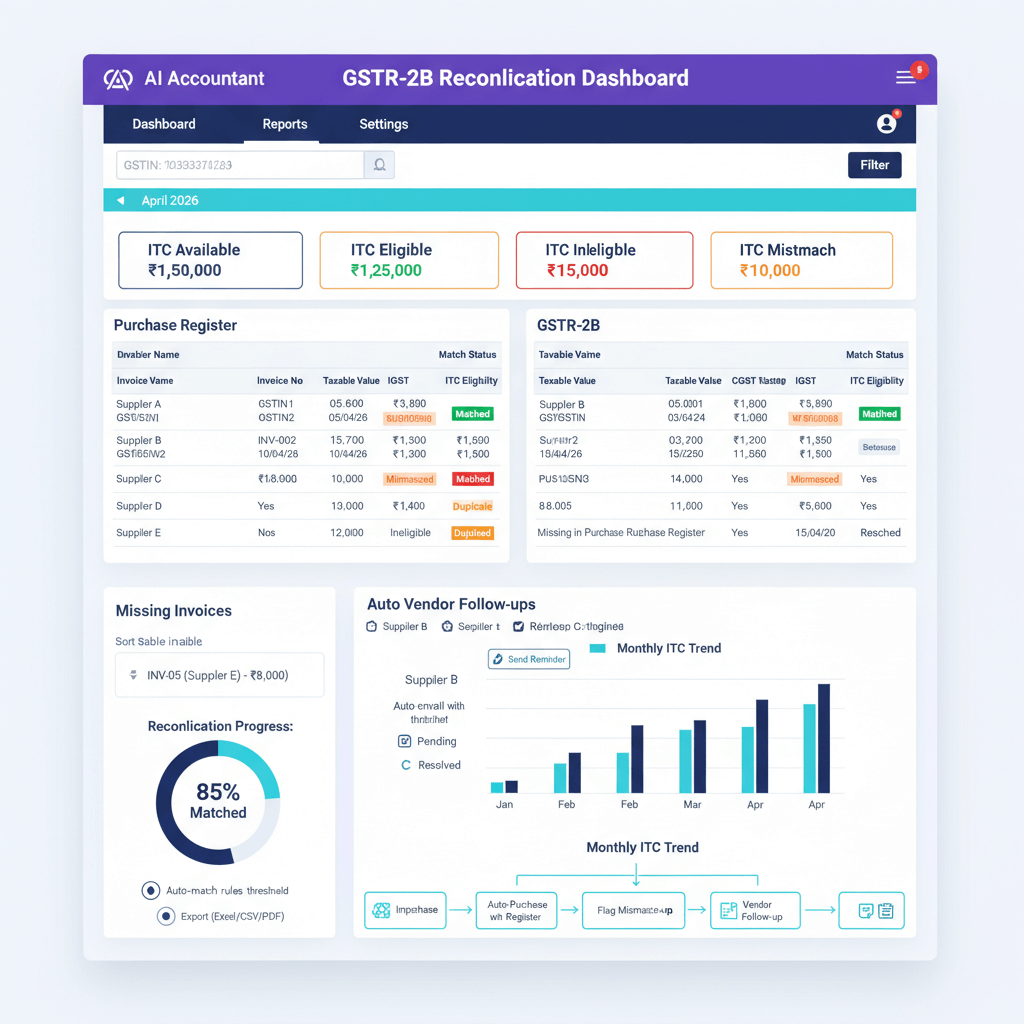

- AI Accountant: Built for India-first parsing, IMPS/NEFT/RTGS/UPI decoding, UTR mapping, GSTIN/PAN enrichment, and native Tally/Zoho integrations, with ISO 27001 and SOC 2 Type 2 coverage.

- QuickBooks: Solid basics, limited India-specific GSTIN/UPI handling, needs customisation.

- Xero: Good categorisation, global-first formats, limited Indian rail depth.

- FreshBooks: Basic parsing, not tuned for Indian split settlements or GST enrichment.

- Tally Prime: Strong reconciliation, manual bank-format setup, limited ML extraction.

- Zoho Books: Bank feeds and matching, lighter on Indian-specific parsing nuances.

FAQ

How do I map a NEFT or RTGS UTR to an invoice in Tally without manual lookup?

Extract the UTR with regex, validate with amount and booking date, then match against open invoices using vendor name and expected due window. A matching priority like UTR exact match, UTR + amount tolerance, and UTR + fuzzy vendor name typically works best. Tools like AI Accountant automate this pipeline and push the reconciled voucher back to Tally.

IMPS entries only show an RRN and partial names. How do CAs reconcile these reliably?

Leverage RRN + amount + date window and use VPA/IFSC if available. When names are truncated, apply fuzzy matching against the vendor master with confidence thresholds. If two candidates tie, route the entry to a review queue. How Plaid parses transaction data outlines similar fallbacks that adapt well to Indian rails.
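A sketch of the fuzzy-name step with a tie guard, using Python's standard `difflib.SequenceMatcher` as a stand-in scorer. It assumes the candidates have already been narrowed by RRN, amount and date window; the 0.8 threshold and 0.05 tie margin are hypothetical defaults to tune on your own data.

```python
from difflib import SequenceMatcher
from typing import Iterable, Optional, Tuple


def best_vendor(partial_name: str, vendor_master: Iterable[str],
                threshold: float = 0.8, margin: float = 0.05) -> Tuple[Optional[str], str]:
    """Return (vendor, "auto") for a clear winner, else (None, "review")."""
    scored = sorted(
        ((SequenceMatcher(None, partial_name.upper(), name.upper()).ratio(), name)
         for name in vendor_master),
        reverse=True,
    )
    if not scored or scored[0][0] < threshold:
        return None, "review"  # nothing close enough
    if len(scored) > 1 and scored[0][0] - scored[1][0] < margin:
        return None, "review"  # two candidates tie (within the margin)
    return scored[0][1], "auto"
```

A truncated name such as "CUSTOMER DEF" scores about 0.86 against "CUSTOMER DEF LTD" but only about 0.67 against "CUSTOMER DEFENCE SYSTEMS", so the first wins clearly; two near-identical master entries fall inside the margin and go to review.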

What’s the right tolerance for UTR + amount matching when bank fees are deducted?

For payouts with net settlements, set a fee-aware tolerance band, for example ±0.5% or a fixed ₹100, whichever is higher, and check for adjacent fee lines marked CHG or COMM. Systems like AI Accountant auto-link fee lines to the same UTR to prevent false mismatches.
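The tolerance rule above translates directly into code. This sketch uses `Decimal` to avoid floating-point drift on rupee amounts; the function names are hypothetical, and it assumes fee lines have already been linked to the same UTR before the check runs.

```python
from decimal import Decimal
from typing import Iterable


def fee_tolerance(expected: Decimal, pct: Decimal = Decimal("0.005"),
                  floor: Decimal = Decimal("100")) -> Decimal:
    """±0.5% of the expected amount or a fixed ₹100, whichever is higher."""
    return max(expected * pct, floor)


def amounts_match(expected: Decimal, received: Decimal,
                  linked_fees: Iterable[Decimal] = ()) -> bool:
    """Add back fee lines (CHG/COMM) already linked to the same UTR, then test the band."""
    gross = received + sum(linked_fees, Decimal("0"))
    return abs(expected - gross) <= fee_tolerance(expected)
```

For small payments the ₹100 floor dominates (on a ₹2,000 payment it is a 5% band), so a lower floor may suit low-value receipts.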

How can I extract GSTIN and PAN when OCR noise corrupts characters?

Run character substitution passes (O↔0, I↔1, B↔8) before regex, verify PAN structure (AAAAA9999A), compute the GSTIN checksum, and cross-check against your vendor master. Keep both the raw and cleaned tokens for audit. Confidence scores decide auto-accept versus review.

UPI refunds create new transaction IDs. How do I tie them back to the original sale?

Search for refund keywords such as REFUND or REVERSAL, match by amount proximity, and apply a sliding time window relative to the original payment. When present, use any echoed references in the refund narration. AI Accountant maintains reverse indexes to automate these links.
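A sketch of that refund-linking logic: keyword detection, then an echoed reference, then a look-back window that prefers the closest amount. The `Txn` type, the keyword set (taken from the refund markers mentioned in this article) and the 30-day window are assumptions; allowing originals larger than the refund is meant to cover partial refunds.

```python
import re
from dataclasses import dataclass
from datetime import date, timedelta
from decimal import Decimal
from typing import List, Optional

REFUND_WORDS = {"REFUND", "REVERSAL", "REV", "RVS"}


@dataclass
class Txn:
    txn_id: str
    narration: str
    amount: Decimal
    posted: date


def _tokens(text: str) -> set:
    return set(re.split(r"[^A-Z0-9]+", text.upper())) - {""}


def link_refund(refund: Txn, originals: List[Txn], window_days: int = 30) -> Optional[str]:
    """Return the txn_id of the original payment a refund most likely reverses."""
    tokens = _tokens(refund.narration)
    if not tokens & REFUND_WORDS:
        return None
    # 1) An echoed original reference wins outright.
    for original in originals:
        if original.txn_id in tokens:
            return original.txn_id
    # 2) Otherwise look back within the window for an amount at least as large
    #    (partial refunds allowed): closest amount first, then the most recent date.
    earliest = refund.posted - timedelta(days=window_days)
    pool = [o for o in originals
            if earliest <= o.posted <= refund.posted and o.amount >= refund.amount]
    if not pool:
        return None
    best = min(pool, key=lambda o: (o.amount - refund.amount, refund.posted - o.posted))
    return best.txn_id
```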

Which fields should I standardise to maximise auto-posting into Tally or Zoho?

At minimum, standardise rail_type, direction, counterparty_name, instrument (VPA/IFSC/account hint), UTR/RRN/txn_id, invoice_ref, GSTIN/PAN, purpose_category, and fees/refund flags. With these fields, auto classification and invoice linking typically jump to 85-95%.

Regex vs ML for Indian bank narrations: what’s the pragmatic split for a CA firm?

Use regex for strict tokens (UTR, RRN, IFSC, GSTIN, PAN) and apply ML for fuzzy names, purpose classification, and noisy OCR text. Add rule-based validators and thresholds to arbitrate conflicts. See regex vs AI-based detection and regex vs libraries vs OCR for trade-offs.

What observability should I set up to keep the parsing system audit-ready?

Log field-level confidence scores, store transformation lineage from raw → cleaned → tokenised → standardised, and version your rule sets. Add drift detection for precision drops and maintain role-based access with masked PAN/GSTIN in UI and logs.

How do I handle same-day, same-amount collisions that confuse reconciliation?

Require at least two corroborating signals beyond amount, such as UTR + IFSC or UTR + VPA, and use timestamps if present. Maintain a short-term buffer to detect duplicates and hold ambiguous entries for human review rather than forcing incorrect auto-matches.
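A minimal sketch of the two-signal rule, assuming candidates have already been filtered to the same amount and date. The field names (`utr`, `ifsc`, `vpa`, `timestamp`, `id`) and the 10-minute timestamp skew are hypothetical; anything short of a single strongly corroborated candidate goes to review.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

SIGNAL_FIELDS = ("utr", "ifsc", "vpa")


def corroborating_signals(line: dict, item: dict,
                          max_skew: timedelta = timedelta(minutes=10)) -> List[str]:
    """Signals beyond amount that agree between a bank line and a candidate."""
    found = [f for f in SIGNAL_FIELDS if line.get(f) and line.get(f) == item.get(f)]
    t1, t2 = line.get("timestamp"), item.get("timestamp")
    if t1 and t2 and abs(t1 - t2) <= max_skew:
        found.append("timestamp")
    return found


def resolve_collision(line: dict, candidates: List[dict],
                      required: int = 2) -> Tuple[Optional[str], str]:
    """Candidates share the line's amount and date; auto-match only a unique strong hit."""
    strong = [c for c in candidates if len(corroborating_signals(line, c)) >= required]
    if len(strong) == 1:
        return strong[0]["id"], "auto"
    return None, "review"


line = {"utr": "HDFC240012345678", "ifsc": "HDFC0001234",
        "timestamp": datetime(2024, 6, 10, 11, 5)}
print(resolve_collision(line, [
    {"id": "INV-1", "utr": "HDFC240012345678", "ifsc": "HDFC0001234"},
    {"id": "INV-3", "ifsc": "SBIN0001234"},
]))  # ('INV-1', 'auto')
```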

What’s a realistic accuracy benchmark CAs should target for month-end closes?

Expect 90-95% accuracy on structured fields, 80-85% on fuzzy entities, and 85-95% auto classification with a mature hybrid pipeline. With disciplined review queues, firms routinely cut close times by 50-70% and reduce suspense entries drastically.

Can an AI tool auto-categorise rent, salaries, and bank charges from narrations reliably?

Yes, when purpose classification models are trained on Indian narrations and supported by regex token capture for fee markers like CHG and COMM. Systems such as AI Accountant combine ML categorisation with rule-based validators to reach high precision on recurring expenses like rent, salaries, and bank charges.

How should a CA firm phase implementation without disrupting current Tally workflows?

Start read-only: ingest statements, parse, enrich, and reconcile in a sandbox. Validate accuracy on a recent month, then enable write-back of only high-confidence entries. Finally, expand to full auto-post with exception queues. This phased rollout avoids ledger noise and builds stakeholder trust.
